The AI Act appears to make a clear distinction between “provider vs deployer”. In practice, however, there are some tricky questions of detail. Particular issues arise, for example, in the context of the AI value chain and the individualization of AI chatbots. This article includes a checklist for differentiating between the provider and the deployer of AI chatbots.

- The “provider vs deployer” distinction only applies to AI systems, not GPAI models.

- For both roles, most obligations relate to high-risk AI systems.

- However, the differentiation of roles is particularly important for AI chatbots because they are so widespread.

- How an AI chatbot is implemented within the AI value chain can play a role here. The same applies to the use of artificial names for AI chatbots and to their individualization.

- At the end, there is a conclusion with four takeaways. There is also a checklist with a workflow for AI chatbots.

Articles of the EU AI Act mentioned in this post (German):

- Article 3 EU AI Act

- Article 4 EU AI Act

- Article 6 EU AI Act

- Article 25 EU AI Act

- Article 50 EU AI Act

Please note that the original text of this article is based on the official German translation of the EU AI Act. Its wording may differ in part from the English version of the EU AI Act.

Relevance and legal consequences regarding “provider vs deployer”

The EU AI Act differentiates between two (main) roles in relation to AI systems:

- the provider (Article 3 No. 3 EU AI Act) and

- the deployer (Article 3 No. 4 EU AI Act).

In practice, however, the distinction between the two roles is not always as simple as one might assume. For this reason, there are already a number of publications from law firms and organizations (see list at the end of the article). Above all, it seems clear that there are demarcation issues.

However, some details remain rather unclear. One reason for this is that the term “deployer” (German: “Betreiber”) was only introduced in a later version of the EU AI Act. Previously, the role was referred to as “user”, with almost the same definition. In the final version, the role of the former “user” is essentially carried over into that of the “deployer”.

A particularly important case in practice for differentiating between “provider vs deployer” is the AI chatbot, to which this article pays special attention. The checklist, including a workflow for differentiating and determining both roles for AI chatbots, can be accessed here.

1. Legal consequences of the distinction

The differentiation between “provider vs. deployer” must already be taken into account when planning AI systems, their distribution and procurement, because:

- providers are fully responsible for the conformity of the AI system with the legal requirements;

- deployers use AI systems in everyday life and are responsible for their proper use and safe operation.

The effects in terms of the costs and resources required are also important. Being the provider of an AI system is significantly more complex, and therefore generally more resource-intensive and expensive, than deploying it.

1.1 Wording of the EU AI Act

First of all, the most important definitions in Article 3 EU AI Act:

For the purposes of this Regulation, the following definitions apply:

No. 3: ‘provider’ means a natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model or that has an AI system or a general-purpose AI model developed and places it on the market or puts the AI system into service under its own name or trademark, whether for payment or free of charge;

No. 4: ‘deployer’ means a natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity;

No. 9: ‘placing on the market’ means the first making available of an AI system or a general-purpose AI model on the Union market;

No. 10: ‘making available on the market’ means the supply of an AI system or a general-purpose AI model for distribution or use on the Union market in the course of a commercial activity, whether in return for payment or free of charge;

No. 11: ‘putting into service’ means the supply of an AI system by the provider for first use directly to the deployer or for own use in the Union for its intended purpose;
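To make the structure of these definitions visible, the provider and deployer criteria can be sketched as two boolean checks. This is a deliberately simplified model, not a legal test: the class `Organization` and its field names are hypothetical, and each flag stands for a question that in practice requires a legal assessment.

```python
from dataclasses import dataclass


@dataclass
class Organization:
    """Hypothetical yes/no answers for one organization and one AI system."""
    develops_or_commissions: bool    # develops the AI system or has it developed (No. 3)
    own_name_or_trademark: bool      # offers or uses it under its own name or trademark (No. 3)
    places_on_market: bool           # first making available on the Union market (No. 9)
    puts_into_service: bool          # supply for first use to a deployer or for own use (No. 11)
    uses_under_own_authority: bool   # uses the AI system under its authority (No. 4)
    personal_non_professional: bool  # use only in a personal, non-professional activity (No. 4)


def is_provider(org: Organization) -> bool:
    # Article 3 No. 3: development or commissioning, plus placing on the market
    # or putting into service under its own name or trademark.
    return (
        org.develops_or_commissions
        and org.own_name_or_trademark
        and (org.places_on_market or org.puts_into_service)
    )


def is_deployer(org: Organization) -> bool:
    # Article 3 No. 4: use under its own authority, excluding purely
    # personal, non-professional use.
    return org.uses_under_own_authority and not org.personal_non_professional


# Example: a company has its intranet chatbot built and uses it internally
# under its own name, which makes it both provider and deployer.
intranet_bot = Organization(
    develops_or_commissions=True,
    own_name_or_trademark=True,
    places_on_market=False,
    puts_into_service=True,  # supply for own use counts as putting into service
    uses_under_own_authority=True,
    personal_non_professional=False,
)
print(is_provider(intranet_bot), is_deployer(intranet_bot))  # True True
```

The example already hints at the dual role discussed in section 3.1: the two checks are independent, so both can be true for the same organization.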

The following sections first present the obligations of both roles in the context of business use and then explain how the roles in the AI value chain can be determined and distinguished from one another.

1.2 Provider: For AI system and GPAI model

With regard to the role of the “provider”, a distinction must be made between two types of obligations:

- those for AI systems within the meaning of Article 3 No. 1 EU AI Act and

- the obligations for general-purpose AI (GPAI) models within the meaning of Article 3 No. 63 EU AI Act.

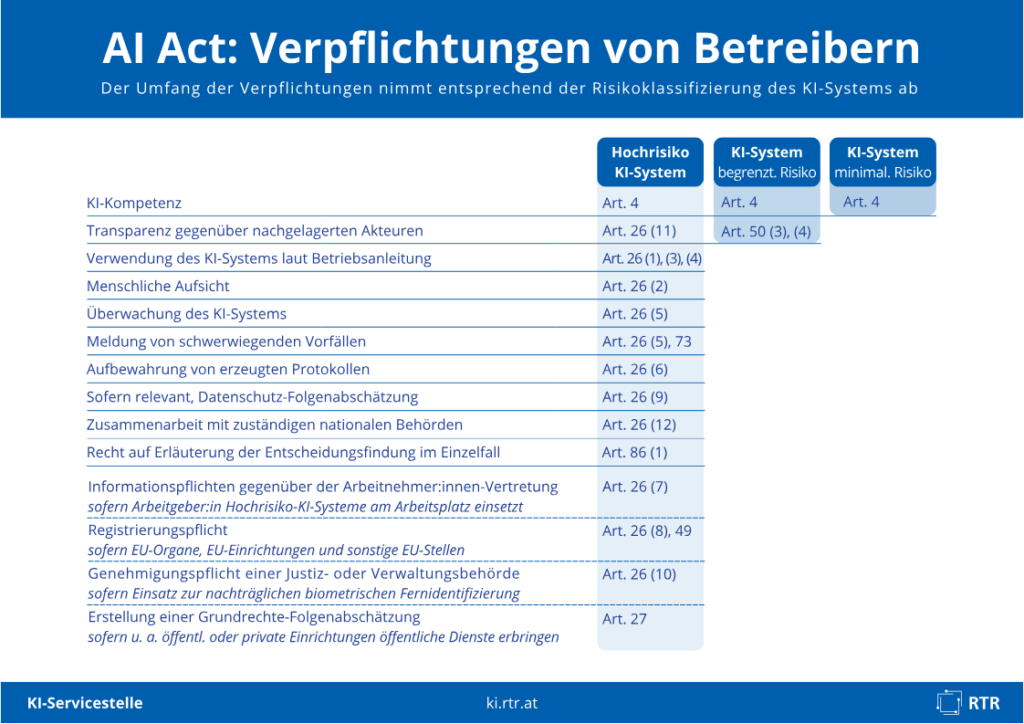

The following RTR overview illustrates the obligations that the role of “provider” potentially entails (German only).

As you can see, most provider obligations concern high-risk AI systems within the meaning of Article 6 EU AI Act (left-hand column). However, there are also provider obligations for medium- and low-risk AI systems (the two right-hand columns).

For all variants of AI systems and GPAI models, the objective of imparting AI literacy within the meaning of Article 4 in conjunction with Article 3 No. 56 EU AI Act must be observed. However, this is only an indirect obligation: failure to implement it cannot be sanctioned directly. Breaches of Article 4 can, however, be taken into account in connection with sanctionable obligations, e.g. when setting the amount of fines.

See also this article on the topic of “AI competence: mandatory or voluntary” (so far only German):

This article primarily deals with the question of “provider vs deployer” for AI systems within the meaning of Article 3 No. 1 EU AI Act. No such demarcation is needed for GPAI models. Nevertheless, it is worth bearing in mind that the provider role is quite exposed overall, because providers of AI systems are also “downstream providers” of the GPAI models integrated into them! This means that all obligations in the overview are potentially relevant, especially for AI chatbots.

Incidentally, the first version of the EU AI Act of 2021 still provided for the role of the “small provider” (Article 3 No. 3 EU AI Act old version). This role has been removed in the final version.

1.3 Deployer: AI systems only

Unlike the provider, the deployer only has obligations with regard to AI systems. Here, too, the obligations for high-risk AI systems within the meaning of Article 6 EU AI Act are in the foreground. However, the obligations for medium-risk AI systems that can create synthetic media (Article 50 EU AI Act) must also be taken into account. There is also an obligation to impart AI literacy (Article 4 EU AI Act).

With regard to the term “deployer”, note that this role was still referred to as “user” in the first version of the EU AI Act of 2021 (Article 3 No. 4 EU AI Act, old version). This shows how the interplay of the different roles and their terminology changed up to the final version.

1.4 Contract is not decisive

It is certain that contracts must be concluded between providers and deployers to establish mutual rights and obligations. Recommendations on this can be found in the literature listed below. However, it is important to note that the contract concluded between providers and deployers does not change the actual role (according to the view expressed here and also that of Mishcon). This means that the provider role cannot be contractually shifted to the deployer contrary to the actual circumstances. What counts within the meaning of the EU AI Act is the de facto role.

The Mishcon article also rightly points out that any “self-assessment” of one's own status as provider or deployer should always be treated with caution! Even if it is “painful”, an external opinion should always be obtained: in case of doubt, it will anticipate the view of a supervisory authority more objectively and less optimistically than you would yourself.

2. Key practical example: AI chatbot

High-risk AI creates the most obligations for both roles. In practice, however, the AI chatbot is probably the most common case with regard to the distinction between “provider vs. deployer”. Up to 40% of all companies already use AI chatbots – for business purposes in the context of one of the two (main) roles!

AI chatbots are used for both internal and external use cases, including:

- for interaction with customers, partners, citizens and employees

- as an intelligent search engine

- to create texts, images, audio and video files

- to generate ideas

- etc.

In many respects, the following content can also be applied to other use cases and to high-risk AI. However, there are differences in detail (in the case of high-risk AI, due to the interaction with other regulations and directives such as the Medical Device Regulation (MDR) or the Cyber Resilience Act (CRA)).

2.1 Overview of AI chatbots

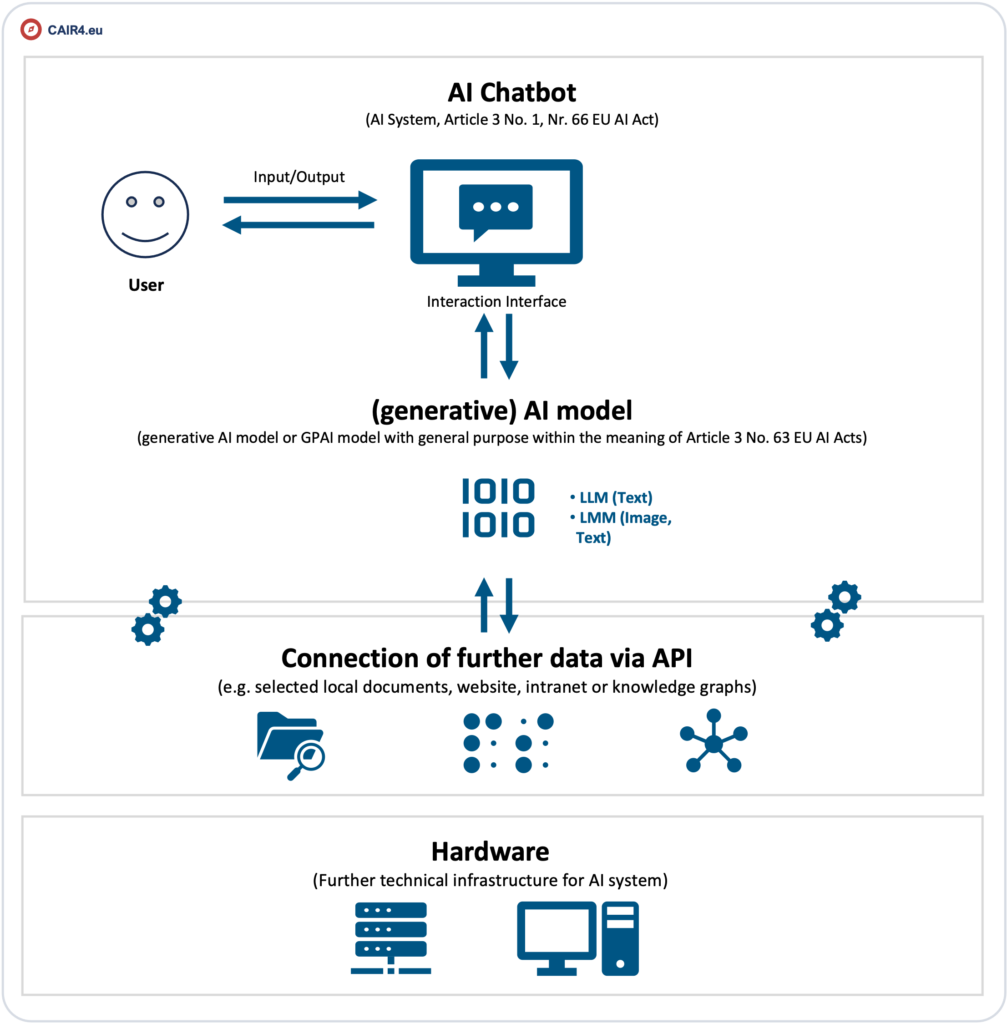

First, a rough technical overview of the typical structure of an AI chatbot:

This overview makes it clear that an AI chatbot consists of different elements. Customizing these and other elements individually can blur the distinction between provider and deployer more than one would expect at first glance: various data can be added, the language model can be fine-tuned, or knowledge graphs can be connected in different ways.

2.2 Implementation variants

In this respect, the question of whether and how the AI chatbot is operationally implemented and customized as part of the AI value chain plays an important role in the “provider vs deployer” distinction.

Three implementation options are particularly relevant here:

- A company can build its own AI chatbot, or have one built, on the basis of any AI model; in that case it is always the “provider” of the AI system. If it also uses the chatbot itself, it is also the “deployer”. There is then a dual role, which matters because of the obligations under Article 50 (1) to (4) EU AI Act!

- The AI chatbot can be sold directly by its manufacturer (e.g. ChatGPT, Google Gemini, neuroflash or MS Copilot) as a business solution, with the manufacturer in the role of “provider”. If the customer uses the chatbot unchanged (1:1), the distribution of roles is also clear: the customer using the AI chatbot is the “deployer”. It does not matter whether it is an “AI as a service” variant or an “on-premises” solution.

- Another common variant is that an AI chatbot (e.g. ChatGPT, Google Gemini or MS Copilot) is hosted by its manufacturer, an IT company or a data center on dedicated servers and then passed on to the “deployer” for a fee under the host's own name (see e.g. Business GPT, comGPT or GoodGPT).

  - Advantage 1: Use usually takes place on a specially protected instance.

  - Advantage 2: The variant offers the AI chatbot and additional services “from a single source”.

  - Advantage 3: Individualizations of the AI chatbot can be implemented in a tailor-made manner (e.g. fine-tuning or complex adjustments to processes and user roles).

The three variants are only a “high-level overview” of reality. Within the AI value chain, significantly more complex variants are possible, e.g. if other AI systems or several different AI models are integrated into one AI system; Recital 97 of the EU AI Act probably sees it the same way.

2.3 Value chain

In practice, the third variant makes it particularly difficult to differentiate between the roles of “provider” and “deployer”. This is where the value chain comes into play, which is outlined for high-risk AI systems in Article 25 EU AI Act, among others. However, a value chain potentially also exists for medium- and low-risk AI systems, not least for AI chatbots.

In variant 3:

- a legal relationship originally between two actors becomes one between three or more actors within the meaning of the EU AI Act;

- if the AI chatbot is then individualized, the question of “provider vs deployer” becomes particularly tricky!

See also the following article on the constellation with three players:

3. “Provider vs deployer” and individualization

As already mentioned, there are several specialist articles on the distinction between provider and deployer (see overview at the end of this article). What they have in common is the basic distribution of roles that already follows from the definitions above. However, most of them discuss providers across the board, i.e. for AI systems of all risk classes as well as for GPAI models. This makes it difficult to focus on the case of AI chatbots, which is particularly important in practice.

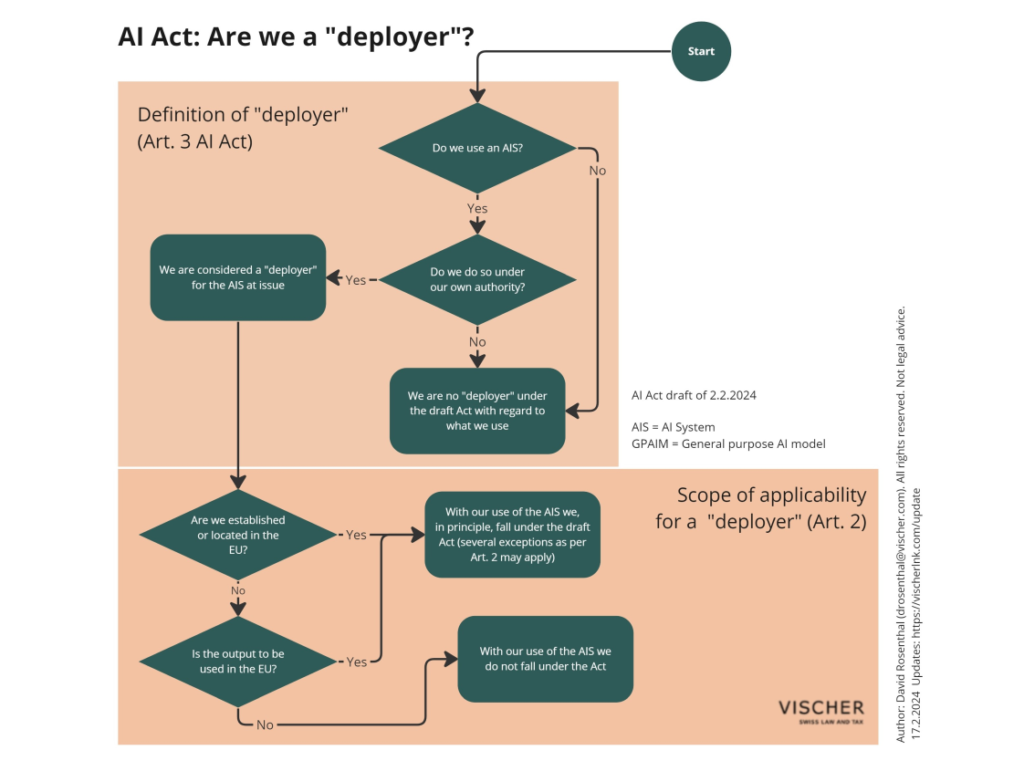

3.1 Provider vs deployer

Let's start with a tool: a workflow for determining deployer status can be found, for example, on the Vischer website:

However, being a deployer does not mean that you are not (also) a provider. A double check is therefore required:

- whether you are a provider of an AI system and/or

- whether you are (also) its deployer.

In the case of AI chatbots in particular, the dual role can mean that you:

- in the role of provider, have to fulfill the obligations under Article 50 (1) and (2) EU AI Act and

- in the role of deployer, also have to fulfill the obligations under Article 50 (3) or (4) EU AI Act.

So if you create an AI chatbot yourself, or customize another provider's AI chatbot, and also use it yourself, you should have the “duty double pack” on your radar!
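The “duty double pack” can be sketched as a simple lookup from roles to the paragraphs of Article 50 EU AI Act. This is a simplified summary under stated assumptions: the dictionary `ARTICLE_50_DUTIES` and the function `article_50_checklist` are hypothetical, the duty descriptions are abbreviated paraphrases, and whether a paragraph actually applies depends on its own conditions (e.g. paragraph 3 only covers emotion recognition and biometric categorisation systems), which the sketch does not model.

```python
# Abbreviated paraphrase of the transparency duties in Article 50 EU AI Act.
# Each paragraph only applies if its own conditions are met; not modelled here.
ARTICLE_50_DUTIES = {
    1: ("provider", "design the system so that people know they are interacting with AI"),
    2: ("provider", "mark synthetic audio, image, video or text output in a machine-readable format"),
    3: ("deployer", "inform people exposed to emotion recognition or biometric categorisation"),
    4: ("deployer", "disclose deep fakes and AI-generated text published on matters of public interest"),
}


def article_50_checklist(is_provider: bool, is_deployer: bool) -> list[str]:
    """Return the Article 50 paragraphs to check for a given combination of roles."""
    roles = set()
    if is_provider:
        roles.add("provider")
    if is_deployer:
        roles.add("deployer")
    return [
        f"Art. 50({number}) [{role}]: {duty}"
        for number, (role, duty) in ARTICLE_50_DUTIES.items()
        if role in roles
    ]


# Dual role: own chatbot, used in-house, so all four paragraphs need checking.
for line in article_50_checklist(is_provider=True, is_deployer=True):
    print(line)
```

Keeping the two roles as separate inputs mirrors the double check above: a “no” for one role never cancels the duties of the other.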

3.2 Individualization as a “threshold” to the provider

However, the specialist literature rightly agrees that individualizing an AI system can turn a “deployer” into a “provider”. The following can be individualized:

- the name of an AI chatbot on the market and

- the content and technical concept and

- the combination of both.

As things stand, however, it remains unclear what exactly needs to be individualized by whom in order for deployers of an AI chatbot, for example, to become its providers. One thing is certain, however: AI chatbots in particular can raise all three questions.

3.2.1 Use of brands for AI chatbots

Perhaps the most underestimated way to inadvertently shift from deployer to provider is to brand your own AI chatbot. This case is explicitly mentioned at the end of Article 3 No. 3 EU AI Act with the phrase “under its own name or trademark”. However, this only applies if the AI chatbot was developed in-house or has been developed by a third party on the organization's behalf. Use for one's own purposes is also covered, since “putting into service” includes supply for own use (Article 3 No. 11 EU AI Act), e.g. the internal use of an AI chatbot on the intranet. This is particularly important in combination with individualization, which can be interpreted as (further) development.

Please note: The following aspects are hypotheses! There is still hardly any specialist literature, and hardly any official or court decisions, on this subject. First and foremost, be aware that this is a relevant aspect of the “provider vs. deployer” question that needs to be considered and clarified. The specialist article by Vischer speculates that, with regard to the aspects described below, supervisory authorities could lean towards an interpretation that leads to provider status in case of doubt, but takes a different view itself. Given these uncertainties, it is important to act with caution here.

At this point, the wording of Article 3 No. 3 and No. 5 of the old version of the EU AI Act should also be taken into account, as it indicates what the legislator originally had in mind:

No. 3 ‘provider’ means a natural or legal person, public authority, agency or other body that develops an AI system or that has an AI system developed with a view to placing it on the market or putting it into service under its own name or trademark, whether for payment or free of charge;

No. 5 ‘user’ means any natural or legal person, public authority, agency or other body using an AI system under its authority, except where the AI system is used in the course of a personal non-professional activity;

3.2.1.1 Artificial names and terms of art

Creative coined names that put the abbreviation “GPT” before or after an inventive addition are particularly popular for AI chatbots.

The abbreviation “GPT” stands for “generative pre-trained transformer”. Due to the popularity of ChatGPT, many organizations use creative GPT name variations. The aim is to make their own AI offering distinguishable, better known and more accessible, but also more trustworthy. Some AI offerings are then advertised internally and/or externally via their own URL and additional marketing materials and communication campaigns.

Typical examples of such names are:

- GPT+[company name or name of organization] (and vice versa)

- [City or authority]+GPT (and vice versa)

- GPT+[fantasy name] (and vice versa)

- human-like names (“Oskar”, “Lucy” etc.)

As understandable as this step is, it could be disastrous in view of the wording of Article 3 No. 3 EU AI Act: although it refers to “own name” and “trademark”, the transition to general trademark law is fluid, especially since the major AI players form their AI brands according to a similar pattern, e.g.:

- “ChatGPT” (from OpenAI)

- “Gemini” (from Google)

- “Copilot” (from Microsoft)

- “Alexa” (from Amazon)

- “Siri” (from Apple)

Against this background, the use of “artificial names” for AI systems would be comparable to the use of a pseudonym: an artist also acts in his own name if he uses his stage or pen name (see, inter alia, § 13 UrhG). Applied to AI, this means: what matters is whether an AI chatbot's artificial name can be attributed to a specific company or organization.

In the legal literature, the naming problem is often reduced to high-risk AI systems because Article 25 (1) (a) EU AI Act contains a provision that also refers to a name or trademark to establish provider status. However, this is most likely only a declaratory clarification, because Article 3 No. 3 EU AI Act already states the same for all (!) AI systems and GPAI models. The older versions of the EU AI Act hardly allow any other interpretation either. This means that it is precisely not only high-risk AI systems that are affected by this rule, but all AI systems, regardless of risk class!

According to the view expressed here, the mere use of an artificial name alone is probably not sufficient to establish provider status. However, it should be noted that German law takes into account, among other things, the appearance of responsibility for content on the internet. Whether and to what extent this could also apply to AI remains to be clarified.

3.2.1.2 Registration is not necessary

Against this background, it is also worth taking a look at trademark law, § 3 (1) of the German Trademark Act (MarkenG):

A trademark serves to identify goods and/or services and thus enables customers and consumers to distinguish the respective products of a company from those of other companies.

It is precisely this aim of distinctiveness that makes the use of artificial names for AI chatbots so relevant with regard to formal provider status. Note that a trademark does not necessarily have to be registered in order to be protected as a (trade) mark.

There are two types of trademark protection:

- Registered trademark:

  - Advantages: strongest protection, exclusive rights, easier to prove in legal disputes.

  - Prerequisite: successful application and registration with the relevant office (in Germany, the German Patent and Trade Mark Office, DPMA).

  - Duration: 10 years, renewable.

- Unregistered trademark (protection through market recognition, German “Verkehrsgeltung”):

  - Advantages: arises from intensive use in business transactions and can also be protected in certain cases.

  - Disadvantages: more difficult to prove; the scope of protection is often narrower.

  - Prerequisite: the relevant public must recognize the sign as a trademark.

If a company, authority or organization wants to use an artificial name, it is always advisable to make it unmistakably clear, when the AI chatbot is called up, that the name is only the “packaging” and that the “content” of the AI chatbot comes from another provider. If a URL is used, a redirect could also help to clarify this, e.g. by having the URL “GPT+[fantasy name]+.com” redirect to a sub-URL of the actual AI provider. Either way: especially when internal and/or external marketing and campaign materials are created and distributed for an AI chatbot, they should always point out that the technology behind it comes from another provider.

3.2.2 AI service offerings

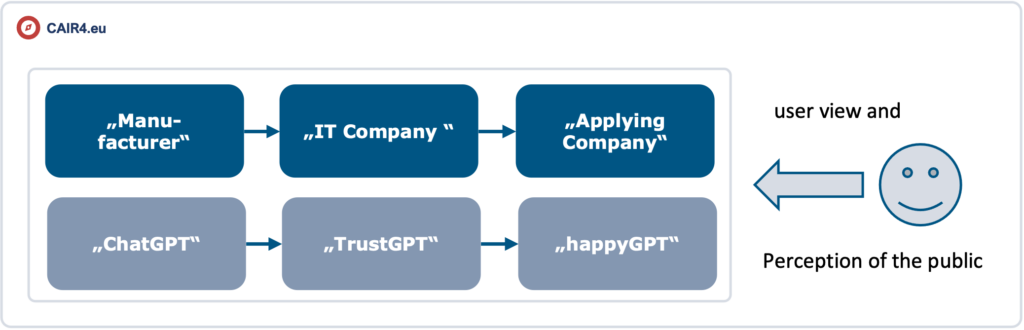

If an IT company or a data center offers an AI chatbot based on common GPAI models on the market, running on its own servers (with additional services) and under an artificial name, it is almost certainly a provider (see e.g. Business GPT, comGPT or GoodGPT).

If these service companies are regarded as providers, it becomes all the more difficult to draw the line to cases in which their customers, as deployer companies, offer the same services on the market under an additional artificial name of their own.

Suppose a user interacts with an AI chatbot called “happyGPT”, which an organization purchased from an IT company as a “TrustGPT” service, and this service uses an AI model from OpenAI (which can easily be confused with the AI system “ChatGPT”). Who, from the public's or the user's point of view, is then the provider and/or deployer?

If one takes as a starting point the protective purpose of the EU AI Act, which aims to prevent the misuse of AI and also grants anyone the right to lodge a complaint with a market surveillance authority under Article 85 EU AI Act, then:

- for questions under Article 50 (1) and (2) EU AI Act, i.e. disclosure of the interaction with AI and machine-readable marking of output, it must be assumed in case of doubt that the organization which, according to public perception, appears to be responsible for the AI chatbot is the provider within the meaning of the EU AI Act;

- for questions under Article 50 (3) and (4) EU AI Act, i.e. emotion recognition, biometric categorisation and deepfakes, public perception is likewise decisive for determining deployer status.

3.2.3 Customization of AI chatbots

As outlined above, the customization of an AI chatbot, e.g. fine-tuning or adding data and/or knowledge graphs, should be considered particularly relevant. Depending on the perspective, such customization can be regarded as development or as commissioned development (“has developed”), the latter especially in the constellation with three or more actors outlined in section 3.2.2.

Vischer takes the following view in this regard: “Accordingly, a fine-tuning of the model of an AI system would be covered, whereas the use of ‘Retrieval Augmented Generation’ (RAG) would not.” Haerting takes a similar line. However, it is important to check exactly what counts as fine-tuning and what does not. Overviews can be found at Wikipedia, businessautomatica or datascientist.
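The technical difference behind this distinction becomes clearer from the mechanics of RAG: relevant documents are retrieved and inserted into the prompt, while the model itself is not changed. Fine-tuning, by contrast, further trains the model and changes its weights. The sketch below shows only the retrieval and prompt-building step; it uses simple word overlap instead of the embedding-based vector search common in practice, and it leaves out the final call to a language model because that depends on the respective provider's API. The function names and the sample knowledge base are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    # Lower-case word set; a simple stand-in for real embeddings.
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank documents by the number of words they share with the query.
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # The organization's own data only enters the prompt; the model weights stay unchanged.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


knowledge_base = [
    "Opening hours are 9 to 17 on weekdays.",
    "Parking is free for visitors.",
    "The canteen serves lunch from 12.",
]
print(build_prompt("What are the opening hours?", knowledge_base, k=1))
```

Because nothing in this pipeline modifies the model, RAG is technically closer to “using” than to “developing” it; the legal assessment, however, may still depend on the overall customization, as discussed next.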

According to the view represented here, adding one's own (training) data, connecting knowledge graphs (e.g. when generating the answer) or combining several AI models can also constitute an individualization that makes the organization a provider within the meaning of the EU AI Act.

The very concept of personalization, individualization or customization of AI suggests that there are countless variants, and their effects should always be examined case by case to determine whether the threshold from deployer to provider is crossed. If a chatbot combines any kind of customization with an artificial name for the result (see section 3.2.1.1 above), the air gets thinner and thinner in case of doubt: provider status becomes more likely! Finally, it must be borne in mind that the protective purpose of the EU AI Act is consumer confidence. A consumer or other person interacting with an AI chatbot must ultimately be able to answer the question of which actors they should trust when using it.

4. Conclusion and four takeaways

1. In general, the demarcation is only relevant for AI systems:

- Providers develop and make AI systems available on the market and are responsible for the conformity of the system with the legal requirements.

- Deployers use AI systems in everyday life and are responsible for their proper use.

- Both roles can coincide: an organization can be both a provider and a deployer.

2. Different obligations arise from the roles:

- Common duties for providers and deployers arise from Article 4 EU AI Act with regard to ensuring AI literacy.

- Providers and deployers have a number of special obligations in the case of high-risk AI.

- In the case of AI chatbots, providers have the obligations of Article 50 (1) and (2) EU AI Act.

- Deployers have those of Article 50 (3) and (4) EU AI Act.

3. The distinction between “provider vs deployer” is particularly important in practice for AI chatbots:

- The allocation of roles appears to be unproblematic where an organization builds an AI chatbot itself or has it built by others.

- The case of the obvious 1:1 operation of a third-party provider’s chatbot also appears to be unproblematic.

- If the chatbot is offered as a separate service in the AI value chain by IT companies and data centers, this generally makes the companies providers within the meaning of the EU AI Act.

4. Particular caution is required with AI chatbots that are used as a service, e.g. on websites:

- From the perspective of the public, it is not easy for end users to tell the roles apart.

- In this respect, the two factors of individualization and the use of artificial names must be viewed particularly critically with regard to the threshold for provider status.

- In these cases, it is conceivable that the website operator holds both the provider and the deployer role.

Links to the articles of the EU AI Act mentioned in this post (German):

- Article 3 EU AI Act

- Article 4 EU AI Act

- Article 6 EU AI Act

- Article 25 EU AI Act

- Article 50 EU AI Act

Checklists and Workflows:

Further articles on the topic:

- SKWSchwarz

- vischer.com

- haerting.de

- linkedin.com

- Verfassungsblog

- lw.com

- mishcon.com

- computerwoche.de

- cancom.at

- rtr.at
